The rush toward AI in CI

I see many teams rushing to plug AI Test Agents directly into CI/CD and expecting magic.

Here’s my honest take after using Playwright’s Planner, Generator, and Healer agents in production.

The core truth

Planner and Generator are instruction-driven tools.

They operate based on:

- Explicit instructions

- Repository context

They generate or update specs only when guided — not continuously or blindly.

If that’s the case, why put them in CI at all?

Why not run them locally, generate specs once, and let CI do its job?

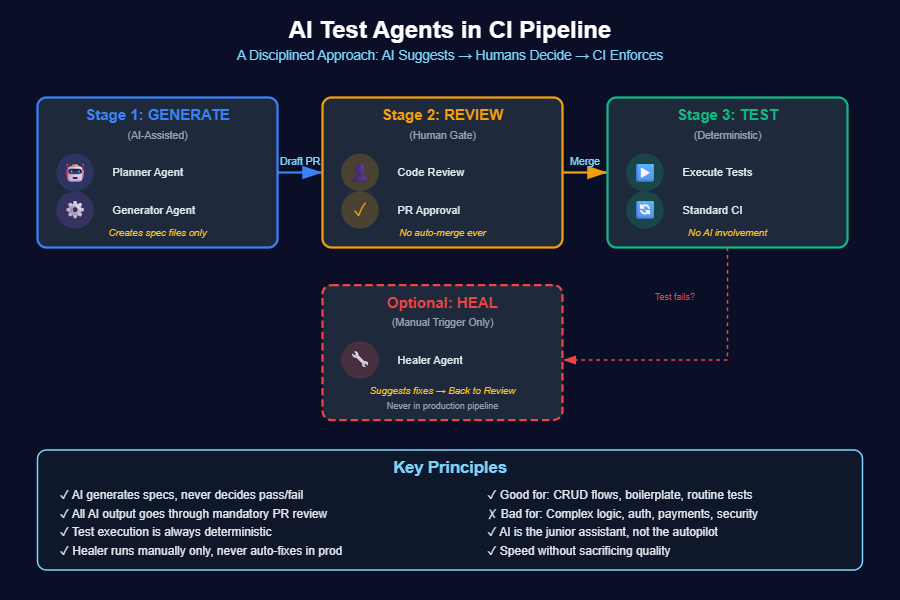

Visual overview

The short answer

Yes — for many teams, local-only agent usage is enough.

A clean and valid setup looks like this:

- Generate specs locally

- Review them

- Commit them

- Let CI run deterministic tests

This setup is simple, predictable, and works well for small to medium systems.
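The CI side of this local-only setup needs nothing agent-specific. Here is a minimal GitLab CI sketch, assuming a Node.js project with `@playwright/test` in its locked dependencies and the public `node:20` image (both assumptions, not part of the setup described above). The job installs dependencies and browsers, then runs the committed specs:

```yaml
# Local-only setup: agents run on developer machines; CI only executes
# the specs that were already reviewed and committed.
stages: [test]

playwright:
  stage: test
  image: node:20                          # assumption: any Node.js image works
  script:
    - npm ci                              # install locked dependencies
    - npx playwright install --with-deps  # browsers plus required OS packages
    - npx playwright test                 # deterministic run, no agents involved
  artifacts:
    when: always                          # keep the report even when tests fail
    paths:
      - playwright-report/                # default output folder of the HTML reporter
```

The design choice is that nothing in this job can create or modify a spec: CI only executes what a human already approved.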

So when does CI integration actually make sense?

CI integration is not about necessity.

It’s about:

- Scale

- Consistency

- Control

I use agents in CI only for controlled spec generation, never for release execution.

How I structure AI agents in CI

Agents never run inside release-critical execution paths.

Instead, I separate responsibilities into three clear stages:

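    stages: [generate, test, heal]

    generate:
      stage: generate
      rules:
        - if: $CI_MERGE_REQUEST_ID       # merge request pipelines only
      script:
        # Placeholder commands: the stock `playwright test` CLI has no --agent flag.
        # Replace them with however your pipeline invokes the Planner and Generator
        # agents (Playwright sets up agent definitions via `npx playwright init-agents`).
        - npx playwright test --agent=planner
        - npx playwright test --agent=generator

    test:
      stage: test
      script:
        - npx playwright test            # deterministic run of all committed specs

    heal:
      stage: heal
      when: manual                       # only a human can start it
      allow_failure: true                # never blocks the pipeline
      script:
        - npx playwright test --agent=healer   # placeholder, as noted above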

What this structure enforces

- Planner & Generator only create or update spec files

- Generated specs go through PRs and human review

- All specs (manual and AI-generated) run via `npx playwright test`

- Healer exists, but only as a manual, non-gating job
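On GitLab, one way to make the PR step concrete is for the generate job to commit its output to a separate branch and open a merge request with Git push options, instead of committing to the branch under test. This is a sketch under several assumptions: `SPEC_BOT_TOKEN` is a hypothetical CI/CD variable holding a project access token with write access, specs are assumed to live under `tests/`, and the `ai-specs/` branch prefix and the bot identity are illustrative names.

```yaml
generate:
  stage: generate
  rules:
    # Skip MRs opened by this job; otherwise each one would trigger another run.
    - if: $CI_MERGE_REQUEST_SOURCE_BRANCH_NAME =~ /^ai-specs\//
      when: never
    - if: $CI_MERGE_REQUEST_ID
  script:
    # ...run the Planner and Generator agents here (placeholder)...
    - git config user.name "spec-bot"              # hypothetical bot identity
    - git config user.email "spec-bot@example.com"
    - git checkout -b "ai-specs/$CI_PIPELINE_ID"
    - git add tests/                               # assumption: specs live in tests/
    # Nothing new was generated: end the job successfully without opening an MR.
    - if git diff --cached --quiet; then exit 0; fi
    - git commit -m "AI-generated specs for MR $CI_MERGE_REQUEST_IID"
    - >
      git push
      -o merge_request.create
      -o merge_request.target="$CI_MERGE_REQUEST_SOURCE_BRANCH_NAME"
      "https://oauth2:${SPEC_BOT_TOKEN}@${CI_SERVER_HOST}/${CI_PROJECT_PATH}.git"
      "ai-specs/$CI_PIPELINE_ID"
```

Because the bot's merge request targets the original MR's source branch, generated specs only reach the feature branch after a reviewer merges them.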

Guardrails I never break

❌ AI can draft YAML or pipeline changes, but it can never auto-merge

❌ AI can propose test code, but humans always approve

❌ Healer gets one attempt; after that, failures escalate to engineers

❌ No self-healing in production or release pipelines
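The Healer guardrails can also live in the job definition instead of in team convention. The sketch below is a drop-in replacement for the heal job in the pipeline above, assuming that release pipelines are the ones running on tags or on the default branch; adjust those conditions to match how your project actually releases.

```yaml
heal:
  stage: heal
  rules:
    # Never offer the Healer in release pipelines (tags or the default branch).
    - if: $CI_COMMIT_TAG
      when: never
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
      when: never
    # Anywhere else: a human must start it, and it can never block the pipeline.
    - when: manual
      allow_failure: true
  retry: 0        # no automatic retries; a failed attempt goes to an engineer
  script:
    - echo "Invoke the Healer agent here"   # placeholder for your real command
```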

AI suggests. CI enforces. Humans decide.

The real insight

CI doesn’t need AI.

Teams do.

Where AI test agents actually help

AI Test Agents are excellent accelerators for:

- Boilerplate specs

- CRUD flows

- Selector drift after UI refactors

But they are terrible autopilots for:

- Business logic

- Authentication & payments

- Security-sensitive flows

- Release decisions

Bottom line

If your product is small and stable, local-only agent usage is enough.

CI integration makes sense only when you need:

- Standardized generation across teams

- Traceability via PRs

- Controlled and repeatable workflows

This isn’t about hype or replacing engineers.

It’s about using AI deliberately — without breaking the guarantees CI/CD exists to provide.