The old automation cycle

The traditional automation workflow looked like this:

→ Write test cases

→ Script manually

→ Debug selectors

→ Fix broken tests after every UI change

This cycle consumed most of a test engineer’s time.

What changed?

Playwright v1.56 introduced AI Test Agents that handle a large part of this work.

Not by guessing.

Not by magic.

But through controlled, auditable tooling.

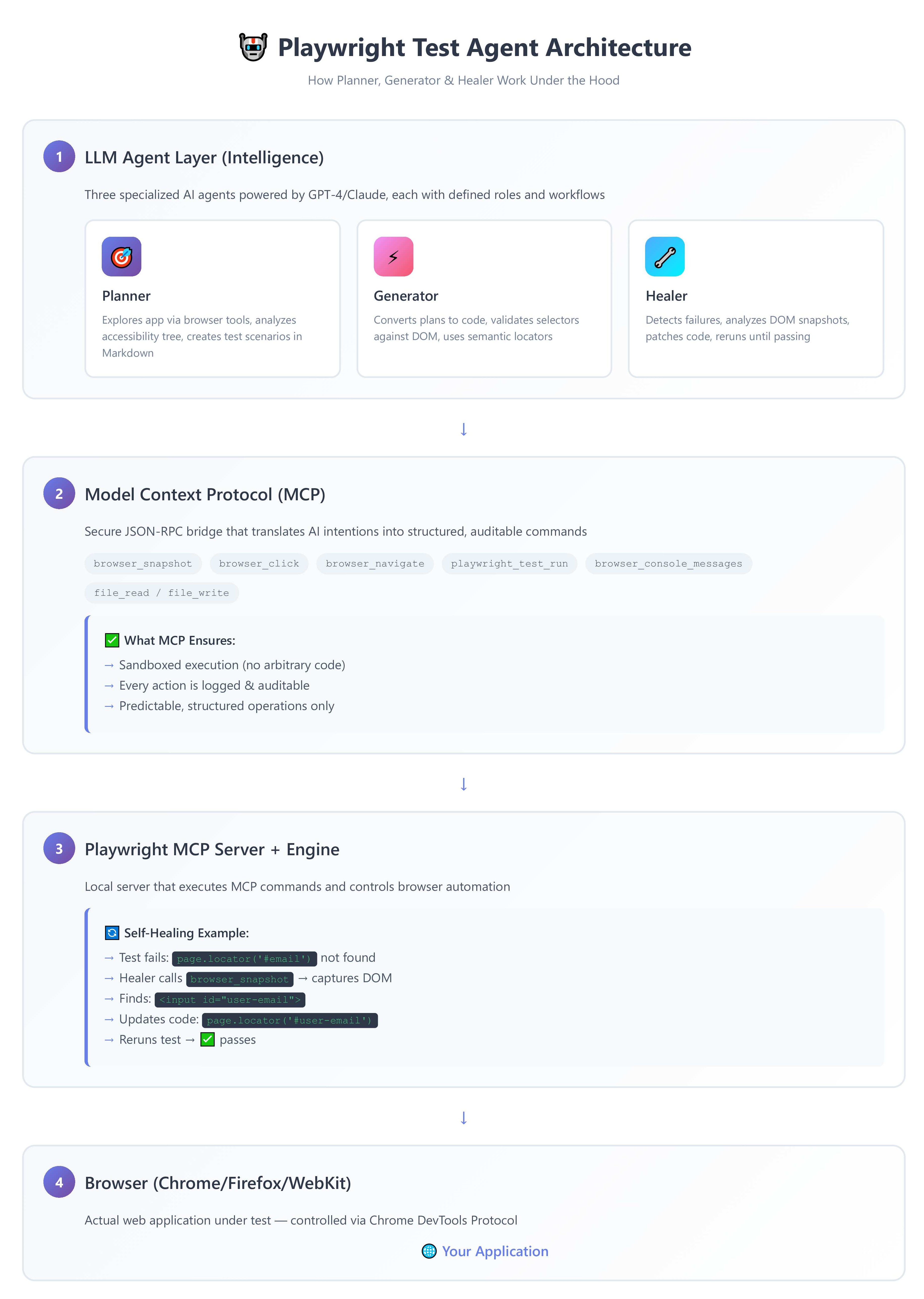

Visual overview

Meet the three agents

Each agent has a clear and limited responsibility.

Planner

- Explores your application

- Writes test scenarios in Markdown

Generator

- Converts scenarios into runnable Playwright tests

- Uses resilient, semantic selectors

Healer

- Detects failures

- Analyzes the DOM

- Patches broken tests

- Re-runs them automatically

This isn’t hype.

It’s also not magic.

Can we rely on them 100%?

No — and that’s perfectly fine.

You still own:

- Test strategy and architecture

- Business validations

- Edge cases and test data design

For regression suites and smoke flows, however, this is a real productivity shift.

How it works under the hood

This capability exists because of a three-layer architecture.

1️⃣ Model Context Protocol (MCP)

MCP is an open protocol, built on JSON-RPC, that acts as a controlled bridge between LLMs and Playwright.

The AI invokes structured tools such as:

- browser_snapshot (DOM + accessibility tree)

- browser_click / browser_navigate

- playwright_test_run

- File read and write

✅ Sandboxed

✅ Auditable

✅ Predictable
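To make the "structured tools" idea concrete, here is a minimal sketch of what a single tool invocation might look like on the wire. MCP carries tool calls as JSON-RPC 2.0 requests using the `tools/call` method; the helper name `buildToolCall`, the example URL, and the `url` argument name are assumptions for illustration, not something taken from the Playwright MCP server itself.

```typescript
// Shape of a JSON-RPC 2.0 request for MCP's "tools/call" method.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper: build the request a client would send to invoke one tool.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown> = {},
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Illustrative call: the URL and the "url" argument name are assumptions.
const navigateRequest = buildToolCall(1, "browser_navigate", {
  url: "https://example.com/login",
});
console.log(JSON.stringify(navigateRequest, null, 2));
```

Because every action is a named tool plus a JSON argument object, each step the AI takes can be logged and reviewed as plain data.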

2️⃣ Playwright MCP Server

Runs locally and enforces execution boundaries:

LLM → MCP → Playwright → Browser

← DOM · logs · results ←

This keeps the AI's actions confined to the tools the server exposes.

3️⃣ LLM agent layer

Each agent is driven by version-controlled prompt scripts:

- planner.chatmode.md

- generator.chatmode.md

- healer.chatmode.md

These files define:

- Allowed tools

- Execution workflows

- Decision logic

Nothing is implicit. Everything is reviewable.
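The "allowed tools" idea can be sketched as a simple allow-list check. This is not how Playwright parses or enforces the chatmode files; the `AgentDefinition` type, the `assertToolAllowed` function, and the specific tools assigned to the healer are all hypothetical, used only to show the principle.

```typescript
// Hypothetical in-memory model of what an agent definition declares.
interface AgentDefinition {
  name: string;
  allowedTools: ReadonlySet<string>;
}

const healer: AgentDefinition = {
  name: "healer",
  // Tool names come from this post; which agent gets which tools is an assumption.
  allowedTools: new Set(["browser_snapshot", "playwright_test_run"]),
};

// Reject any tool the agent definition does not explicitly list.
function assertToolAllowed(agent: AgentDefinition, tool: string): void {
  if (!agent.allowedTools.has(tool)) {
    throw new Error(`${agent.name} is not allowed to call ${tool}`);
  }
}

assertToolAllowed(healer, "browser_snapshot"); // listed, so this passes silently
```

Since the list lives in a version-controlled file, widening an agent's permissions shows up as a reviewable diff.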

Self-healing example

A test fails:

page.locator('#email') not found

Healer flow

- Captures DOM snapshot

- Finds <input id="user-email">

- Updates selector

- Re-runs test → ✅ passes

Zero manual debugging.

All through MCP tool calls.
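The healer flow above can be approximated in a few lines. This is a deliberately naive sketch, not the Healer's actual algorithm: the `SnapshotElement` shape, the substring-matching heuristic, and the function names `healIdSelector` and `patchTestSource` are assumptions made for illustration.

```typescript
// Minimal stand-in for one element in a DOM snapshot.
interface SnapshotElement {
  tag: string;
  id: string;
}

// Hypothetical heuristic: replace a broken "#id" selector with the first
// element whose id contains the old id as a substring.
function healIdSelector(broken: string, snapshot: SnapshotElement[]): string | null {
  if (!broken.startsWith("#")) return null;
  const oldId = broken.slice(1);
  const match = snapshot.find((el) => el.id !== "" && el.id.includes(oldId));
  return match ? `#${match.id}` : null;
}

// Rewrite every quoted occurrence of the old selector inside the test source.
function patchTestSource(source: string, broken: string, healed: string): string {
  return source.split(`'${broken}'`).join(`'${healed}'`);
}

const snapshot: SnapshotElement[] = [
  { tag: "form", id: "login" },
  { tag: "input", id: "user-email" },
];
const healed = healIdSelector("#email", snapshot);
const testSource = "await page.locator('#email').fill('a@b.co');";
if (healed !== null) {
  // prints: await page.locator('#user-email').fill('a@b.co');
  console.log(patchTestSource(testSource, "#email", healed));
}
```

A real healer would weigh roles, labels, and surrounding structure rather than a substring match, and the patched test should still go through code review like any other change.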

What this means for test engineers

Your role shifts significantly.

Before

- ~70% scripting

Now

- ~70% test design and strategy

AI handles:

- Boilerplate generation

- Selector drift after refactors

- Common flakiness

You focus on quality decisions that actually matter.

Current limitations

Struggles with

- Complex conditional workflows

- Custom business validation rules

- Visual regression testing

- Performance and security testing

Excels at

- Standard CRUD flows

- Form submissions

- Navigation scenarios

- Post-refactor selector updates

Final thought

After exploring MCP servers in earlier posts, this is the real payoff.

AI that doesn’t just write tests.

It maintains them.

This doesn’t replace QA engineers.

It elevates our work from code maintenance to strategic test design.